Think Tank


Jun 15 2020

Docker and iptables

On the XWiki project, we use TestContainers to run our functional Selenium tests inside Docker containers, in order to test various configurations.

We've been struggling with flickering tests caused by infra issues, and it took us a long time to find the root cause. For example, from time to time TestContainers failed to start its Ryuk Docker container and we couldn't understand why. We also had plenty of other issues that were hard to track down.

After researching it we've found that the main issue was caused by the way we set up our iptables on our CI agents.

These agent machines have network interfaces exposed to the internet (on eth0 for example) and, in order to be safe, our Infra Admin had blocked all incoming and outgoing network traffic by default. The iptables config was like this:

:FORWARD ACCEPT [0:0]

:OUTPUT DROP [0:0]

-A INPUT -i lo -j ACCEPT

-A INPUT -i br0 -j ACCEPT

-A INPUT -i tap0 -j ACCEPT

-A INPUT -i eth0 ... specific rules

-A OUTPUT -o eth0 ... specific rules

-A OUTPUT -o lo -j ACCEPT

-A OUTPUT -o br0 -j ACCEPT

-A OUTPUT -o tap0 -j ACCEPT

Note that since we block all interfaces by default, we need to add explicit rules to allow some (lo, br0, tap0 in our case).

The problem is that Docker doesn't add rules for the INPUT/OUTPUT chains! It only adds its own NAT and forwarding rules (see Docker and iptables for more details). It also creates new network interfaces such as docker0 and br*. Since by default we forbade INPUT/OUTPUT on all interfaces, connections between the containers and the host were refused in many cases.

So we changed it to:

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

-A INPUT -i eth0 ... specific rules

-A OUTPUT -o eth0 ... specific rules

-A INPUT -i eth0 -j DROP

-A OUTPUT -o eth0 -j DROP

This config allows all internal interfaces to accept incoming/outgoing connections (INPUT/OUTPUT) while making sure that eth0 only exposes the minimum to the internet.
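To make the difference between the two configurations concrete, here is a minimal sketch in Java of how a chain picks a verdict: rules are checked in order, the first match wins, and the chain policy applies when nothing matches. This is a deliberately simplified model, not how iptables is implemented. The class and field names are made up for the illustration, rules match on the interface name only, and the elided "specific rules" for eth0 are left out.

```java
import java.util.List;

/** Simplified model of an iptables chain: first matching rule wins, else the policy applies. */
final class ChainModel {
    enum Verdict { ACCEPT, DROP }

    /** A rule matching on the interface name only (real rules can match on much more). */
    static final class Rule {
        final String iface;
        final Verdict target;

        Rule(String iface, Verdict target) {
            this.iface = iface;
            this.target = target;
        }
    }

    static final class Chain {
        final Verdict policy;
        final List<Rule> rules;

        Chain(Verdict policy, Rule... rules) {
            this.policy = policy;
            this.rules = List.of(rules);
        }

        Verdict evaluate(String iface) {
            for (Rule rule : rules) {
                if (rule.iface.equals(iface)) {
                    return rule.target;
                }
            }
            // No rule matched: fall back to the chain policy.
            return policy;
        }
    }

    // Old OUTPUT chain: DROP policy, only the listed interfaces are accepted.
    static final Chain OLD_OUTPUT = new Chain(Verdict.DROP,
        new Rule("lo", Verdict.ACCEPT),
        new Rule("br0", Verdict.ACCEPT),
        new Rule("tap0", Verdict.ACCEPT));

    // New OUTPUT chain: ACCEPT policy, eth0 is dropped once its specific rules (elided) did not match.
    static final Chain NEW_OUTPUT = new Chain(Verdict.ACCEPT,
        new Rule("eth0", Verdict.DROP));

    public static void main(String[] args) {
        for (String iface : List.of("lo", "docker0", "eth0")) {
            System.out.println(iface + ": old=" + OLD_OUTPUT.evaluate(iface)
                + ", new=" + NEW_OUTPUT.evaluate(iface));
        }
    }
}
```

In this model, an interface that nobody listed (docker0 here) falls through to the policy, which is why it is dropped by the old chain and accepted by the new one.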

This solved our docker network issues.

Feb 18 2020

STAMP, the end

The STAMP research project has been a boon for the XWiki open source project. It has allowed the project to gain a lot in terms of quality. There have been lots of areas of improvements but the main domains that have substantially benefited the quality are:

- Increase of the test coverage. At the start of STAMP the XWiki project already had a substantial automated test suite covering 65.29% of the whole code base. Not only was the project able to increase it to over 71.4% (a major achievement for a large code base of 500K NCLOC such as XWiki), but it also improved the quality of the tests themselves by increasing their mutation score using PIT/Descartes.

- XWiki’s build and CI now fail when either the coverage or the mutation score is reduced.

- Addition of configuration testing. At the start of STAMP the XWiki project was only testing automatically a single configuration (latest HSQLDB + latest Jetty + latest Firefox). From time to time some testers were doing manual tests on different configurations but this was a very intensive process, and a random and ad hoc one. A substantial number of configuration-related bugs were being raised in the XWiki issue tracker. Thanks to STAMP the XWiki project has been able to cover all the configurations it supports and to execute its functional UI tests on all of them (every day, every week and every month based on different criteria). This is leading to a huge improvement in quality and in developer productivity, since developers no longer need to manually set up multiple environments on their machines to test their new code (which was very time-consuming, and difficult when onboarding new developers).

XWiki has spread its own engineering practices to the other STAMP project members and has benefitted from the interactions with the students, researchers and project members to accrue and firm up its own practices.

Last but not least, the STAMP research project has allowed the XWiki project to get time to work on testing in general, on its CI/CD pipeline, on adding new distribution packagings (such as the new Docker-based distribution which has increased XWiki's Active Installs) which were prerequisites for STAMP-developed tools, but which have tremendous benefits by themselves even outside of pure testing. Globally, this has raised the bar for the project, placing it even higher in the category of projects with a strong engineering practice with controlled quality. The net result is a better and more stable product with an increased development productivity. Globally STAMP has allowed a European software editor (XWiki SAS) and open source software (XWiki) to match and possibly even surpass non-European (American, etc) software editors in terms of engineering practices.

So while it's the end of STAMP, its results continue to live on inside the XWiki project.

I'm personally very happy to have been part of STAMP and I have learnt a lot about the topics at hand but also about European research projects (it was my first project).

Jun 09 2019

Scheduled Jenkinsfile

Context

On the XWiki project we use Jenkinsfiles in our GitHub repositories, along with "GitHub Organization" type of jobs so that Jenkins automatically handles creating and destroying jobs based on git branches in these repositories. This is very convenient and we have a pretty elaborate Jenkinsfile (using shared global libraries we developed) in which we execute about 14 different Maven builds, some in series and others in parallel, to validate different things, including the execution of functional tests.

We recently introduced functional tests that can be executed with different configurations (different databases, different servlet containers, different browsers, etc). Now that represents a lot of combinations and we can't run all of these every time there's a commit in GitHub. So we need to run some of them only once per day, others once per week and the rest once per month.

The problem is that Jenkins doesn't seem to support this feature out of the box when using a Jenkinsfile. In an ideal world, Jenkins would support several Jenkinsfiles to achieve this. Right now the obvious solution is to manually create new jobs to run these configuration tests. However, doing this removes the benefits of the Jenkinsfile, the main one being the automatic creation and destruction of jobs for branches. We started with this and after a few months it became too painful to maintain. So we had to find a better solution...

The Solution

Let me start by saying that I find this solution suboptimal as it's complex and fraught with several problems.

Generally speaking, the solution we implemented is based on the Parameterized Scheduler Plugin, but the devil is in the details.

- Step 1: Make your job a parameterized job by defining a type variable that will hold what type of job you want to execute. In our case: standard, docker-latest (to be executed daily), docker-all (to be executed weekly) and docker-unsupported (to be executed monthly). All the docker-* job types will execute our functional tests on various configurations. Also configure the Parameterized Scheduler plugin accordingly:

private def getCustomJobProperties()

{

return [

parameters([string(defaultValue: 'standard', description: 'Job type', name: 'type')]),

pipelineTriggers([

parameterizedCron('''@midnight %type=docker-latest

@weekly %type=docker-all

@monthly %type=docker-unsupported'''),

cron("@monthly")

])

]

}

You set this in the job with:

properties(getCustomJobProperties())

Important note: The job will need to be triggered once before the scheduler and the new parameter are effective!

- Step 2: Based on the type parameter value, decide what to execute. For example:

...

if (params.type && params.type == 'docker-latest') {

buildDocker('docker-latest')

}

...

- Step 3: You may want to manually trigger your job using the Jenkins UI and decide what type of build to execute (this is useful to debug some test problems for example). You can do it this way:

def choices = 'Standard\nDocker Latest\nDocker All\nDocker Unsupported'

def selection = askUser(choices)

if (selection == 'Standard') {

...

} else if (selection == 'Docker Latest') {

...

} else ...

In our case askUser is a custom pipeline library defined like this:

def call(choices)

{

def selection

// If a user is manually triggering this job, then ask what to build

if (currentBuild.rawBuild.getCauses()[0].toString().contains('UserIdCause')) {

echo "Build triggered by user, asking question..."

try {

timeout(time: 60, unit: 'SECONDS') {

selection = input(id: 'selection', message: 'Select what to build', parameters: [

choice(choices: choices, description: 'Choose which build to execute', name: 'build')

])

}

} catch(err) {

def user = err.getCauses()[0].getUser()

if ('SYSTEM' == user.toString()) { // SYSTEM means timeout.

selection = 'Standard'

} else {

// Aborted by user

throw err

}

}

} else {

echo "Build triggered automatically, building 'Standard'..."

selection = 'Standard'

}

return selection

}

Limitations

While this may sound like a nice solution, it has a drawback. Jenkins's build history gets messed up, because you're reusing the same job name but running different builds. For example, the test failure age gets reset every time a different type of build is run. Note that at least the individual test history is kept.

Since different types of builds are executed in the same job, we also wanted the job history to visibly show when scheduled jobs are executed vs the standard jobs. Thus we added the following in our pipeline:

import com.jenkinsci.plugins.badge.action.BadgeAction

...

def badgeText = 'Docker Build'

def badgeFound = isBadgeFound(currentBuild.getRawBuild().getActions(BadgeAction.class), badgeText)

if (!badgeFound) {

manager.addInfoBadge(badgeText)

manager.createSummary('green.gif').appendText("<h1>${badgeText}</h1>", false, false, false, 'green')

currentBuild.rawBuild.save()

}

@NonCPS

private def isBadgeFound(def badgeActionItems, def badgeText)

{

def badgeFound = false

badgeActionItems.each() {

if (it.getText().contains(badgeText)) {

badgeFound = true

return

}

}

return badgeFound

}

Visually this gives the following where you can see information icons for the configuration tests (and you can hover over the information icon with the mouse to see the text):

What's your solution to this problem? I'd be very eager to know if someone has found a better solution to implement this in Jenkins.

Feb 09 2019

Global vs Local Coverage

Context

On the XWiki project, we've been pursuing a strategy of failing our Maven build automatically whenever the test coverage of each Maven module is below a threshold indicated in the pom.xml of that module. We're using Jacoco to measure this local coverage.

We've been doing this for over 6 years now and we've been generally happy about it. This has allowed us to raise the global test coverage of XWiki by a few percent every year.

More recently, I joined the STAMP European Research Project and one of our KPIs is the global coverage, so I got curious and wanted to look at precisely how much we're winning every year.

I realized that, even though we've been generally increasing our global coverage (computed using Clover), there are times when we actually reduce it or increase very little, even though at the local level all modules increase their local coverage...

Reporting

So I implemented a Jenkins pipeline script that is using Open Clover, that runs every day and that gets the raw Clover data and generates a report. This report shows how the global coverage evolves, Maven module by Maven module and the contribution of each module to the global coverage.

Here's a recent example report comparing global coverage from 2019-01-01 to 2019-01-08, i.e. just 8 days.

The lines in red are modules that have had changes lowering the global coverage (even though the local coverage for these modules didn't change or even increased!).

Why?

Analyzing a difference

So once we find that a module has lowered the global coverage, how do we analyze where it's coming from?

It's not easy though! What I've done is to take the 2 Clover reports for both dates, compare all packages in the module and pinpoint the exact code where the coverage was lowered. Then it's about knowing the code base and the existing tests to find why those places are not executed anymore by the tests. Note that Clover helps a lot since its reports can tell you which tests contribute to coverage for each covered line!

If you're interested, check for example a real analysis of the xwiki-commons-job coverage drop.

Reasons

Here are some reasons I analyzed that can cause a module to lower the global coverage even though its local coverage is stable or increases:

- Some functional tests exercise (directly or indirectly) code lines in this module that are not covered by its unit tests.

- Then some of this code is removed because a) it's no longer necessary, or b) it's deprecated, or c) it's moved to another module. Since there are no unit tests that cover it in the module, the local coverage doesn't change but the global one for the module does, and it's lowered. Note that the full global coverage may not change if the code is moved to another module which itself is covered by unit or functional tests.

- It could also happen that the code line was hit because of a bug somewhere. Not a bug that throws an Exception (since that would have failed the test) but a bug that results in some IF path entered and for example generating a warning log. Then the bug is fixed and thus the functional tests don't enter this IF anymore and the coverage is lowered...

(FTR this is what happened for the xwiki-commons-job coverage drop in the shown report above)


- Some new module is added and its local coverage is below the average coverage of the other modules.

- Some module is removed and it had a higher than average global coverage.

- Some tests have failed, and especially some functional tests are flickering. This will reduce the coverage of all module code lines that are only tested through tests located in other modules. It's thus important to check the test successes before "trusting" the coverage.

- The local coverage is computed by Jacoco and we use the instructions ratio, whereas the global coverage is computed using Clover, which uses the TPC formula. There are cases where the covered instructions would stay stable but the TPC value would decrease. For example, if a method is split into 2 methods, the covered bytecode instructions remain the same but the TPC will decrease, since the number of covered methods will stay fixed but the total number of methods will increase by 1...

- Rounding errors. We should ignore very small differences because it's possible that the local coverage would seem to remain the same (for example we round it to 2 digits) while the global coverage decreases (we round it to 4 digits in the shown report, because the contribution of each module to the global coverage is low).
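The TPC point in the list above can be checked with a bit of arithmetic. Clover's TPC is (BT + BF + SC + MC) / (2*B + S + M), where BT and BF are the branches evaluated to true and false, SC the covered statements, MC the covered methods, and B, S, M the total branches, statements and methods. The sketch below uses made-up numbers (not taken from any XWiki report) and follows the scenario described above: the total number of methods grows by 1 while everything else stays fixed.

```java
/** Worked example of Clover's TPC formula with made-up numbers. */
final class TpcExample {
    /** TPC = (BT + BF + SC + MC) / (2*B + S + M), expressed as a percentage. */
    static double tpc(int branchesTrue, int branchesFalse, int coveredStatements, int coveredMethods,
        int branches, int statements, int methods)
    {
        return 100.0 * (branchesTrue + branchesFalse + coveredStatements + coveredMethods)
            / (2 * branches + statements + methods);
    }

    public static void main(String[] args) {
        // Hypothetical module: 8 branches, 50 statements, 10 methods.
        double before = tpc(6, 5, 40, 8, 8, 50, 10);
        // Same covered elements, but the module now counts 11 methods instead of 10.
        double after = tpc(6, 5, 40, 8, 8, 50, 11);
        System.out.printf("TPC before: %.2f%%, after: %.2f%%%n", before, after);
    }
}
```

The numerator is unchanged while the denominator grows, so the TPC goes down even though an instruction-based ratio would not move.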

Strategy

So what strategy can we apply to ensure that the global coverage doesn't go down?

Here's the strategy that we're currently discussing/trying to set up on the XWiki project:

- We run the Clover Jenkins pipeline every night (between 11PM-8AM)

- The pipeline sends an email whenever the new report has its global TPC going down when compared with the baseline

- The baseline report is the report generated just after each XWiki release. This means that we keep the same baseline during a whole release

- We add a step in the Release Plan Template to have the report passing before we can release.

- The Release Manager is in charge of a release from day 1 to the release day, and is also in charge of making sure that the global coverage job failures get addressed before the release day so that we’re ready on the release day, i.e that the global coverage doesn't go down.

- Implementation detail: don’t send a failure email when there are failing tests in the build, to avoid false positives.
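The notification rule from the list above boils down to a small decision. Here is a minimal sketch of it in Java. The class, method and parameter names are hypothetical and this is not the code of our pipeline: it only illustrates comparing the new global TPC with the baseline and staying silent when the build has failing tests.

```java
/** Sketch of the "send a failure email or not" decision; all names are hypothetical. */
final class CoverageGate {
    /**
     * @param baselineTpc global TPC of the report generated just after the last release
     * @param currentTpc global TPC of the report generated by the nightly run
     * @param failingTests number of failing tests in the nightly build
     * @return true when a failure email should be sent
     */
    static boolean shouldNotify(double baselineTpc, double currentTpc, int failingTests) {
        if (failingTests > 0) {
            // Failing tests lower the coverage artificially: skip to avoid false positives.
            return false;
        }
        return currentTpc < baselineTpc;
    }

    public static void main(String[] args) {
        System.out.println(shouldNotify(71.40, 71.35, 0)); // coverage went down, tests are green
        System.out.println(shouldNotify(71.40, 71.35, 3)); // coverage went down, but tests failed
        System.out.println(shouldNotify(71.40, 71.52, 0)); // coverage went up
    }
}
```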

For reference, the various discussions on the XWiki list:

Conclusion

This experimentation has shown that in the case of XWiki, the global coverage is increasing consistently over the years, even though, technically, it could go down. It also shows that with a bit more care, and by ensuring that we always grow the global coverage between releases, we could make that global coverage increase a bit faster.

Additional Learnings

- Clover does a bad job for comparing reports.

- Don't trust Clover reports at package level either, they don't include all files.

- Test failures reported by the Clover report are not accurate at all. For example on this report Clover shows 276 failures and 0 errors. I checked the build logs and the reality is 109 failures and 37 errors. Some tests are reported as failing when they're passing.

- Here's an interesting example where Clover says that MacroBlockSignatureGeneratorTest#testIncompatibleBlockSignature() is failing but in the logs we have:

[INFO] Running MacroBlockSignatureGeneratorTest

[INFO] Tests run: 3, Failures: 0, Errors: 0, Skipped: 0, Time elapsed: 0.023 s - in MacroBlockSignatureGeneratorTest

What's interesting is that Clover reports:

And the test contains:

@Test

public void testIncompatibleBlockSignature() throws Exception

{

thrown.expect(IllegalArgumentException.class);

thrown.expectMessage("Unsupported block [org.xwiki.rendering.block.WordBlock].");

assertThat(signer.generate(new WordBlock("macro"), CMS_PARAMS), equalTo(BLOCK_SIGNATURE));

}

This is a known Clover issue with tests that assert exceptions (https://community.atlassian.com/t5/Questions/JUnit-Rule-ExpectedException-marked-as-failure/qaq-p/76884)...


Jul 20 2018

Resolving Maven Artifacts with ShrinkWrap... or not

On the XWiki project we wanted to generate custom XWiki WARs directly from our unit tests (to deploy XWiki in Docker containers automatically and directly from the tests).

I was really excited when I discovered the ShrinkWrap Resolver library. It looked like exactly what I needed. I didn't want to use Aether (now deprecated) or the new Maven Resolver (I wasn't sure what its state was and there was very little doc on how to use it).

So I coded it. Here are some extracts showing you how easy it is to use ShrinkWrap.

Example to find all dependencies of an Artifact:

List<MavenResolvedArtifact> artifacts = resolveArtifactWithDependencies(

String.format("org.xwiki.platform:xwiki-platform-distribution-war-dependencies:pom:%s", version));

...

protected List<MavenResolvedArtifact> resolveArtifactWithDependencies(String gav)

{

return getConfigurableMavenResolverSystem()

.resolve(gav)

.withTransitivity()

.asList(MavenResolvedArtifact.class);

}

protected ConfigurableMavenResolverSystem getConfigurableMavenResolverSystem()

{

return Maven.configureResolver()

.withClassPathResolution(true)

.withRemoteRepo(

"mavenXWikiSnapshot", "http://nexus.xwiki.org/nexus/content/groups/public-snapshots", "default")

.withRemoteRepo(

"mavenXWiki", "http://nexus.xwiki.org/nexus/content/groups/public", "default");

}

Here's another example to read the version from a resolved pom.xml file (didn't find how to do that easily with Maven Resolver BTW):

{

MavenResolverSystem system = Maven.resolver();

system.loadPomFromFile("pom.xml");

// Hack around a bit to get to the internal Maven Model object

ParsedPomFile parsedPom = ((MavenWorkingSessionContainer) system).getMavenWorkingSession().getParsedPomFile();

return parsedPom.getVersion();

}

And here's how to resolve a single Artifact:

File configurationJar = resolveArtifact(

String.format("org.xwiki.platform:xwiki-platform-tool-configuration-resources:%s", version));

...

protected File resolveArtifact(String gav)

{

return getConfigurableMavenResolverSystem()

.resolve(gav)

.withoutTransitivity()

.asSingleFile();

}

Pretty nice, isn't it?

It looked nice till I tried to use the generated WAR... Then all my hopes disappeared... There's one big issue: it seems that ShrinkWrap resolves dependencies using a different strategy than Maven does:

- Maven: Maven takes the artifact closest to the top (From the Maven web site: "Dependency mediation - this determines what version of a dependency will be used when multiple versions of an artifact are encountered. Currently, Maven 2.0 only supports using the "nearest definition" which means that it will use the version of the closest dependency to your project in the tree of dependencies.").

- ShrinkWrap: First artifact found in the tree (navigating each dependency node to the deepest).

So this led to a non-functional XWiki WAR with completely different JAR versions than what is generated by our Maven build.
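To illustrate why the two strategies can disagree, here is a minimal sketch in Java. It does not call Maven or ShrinkWrap. It only models a dependency tree and the two ways of walking it as described above: nearest definition (breadth-first, the shallowest declaration wins) versus first artifact found when descending each branch to the deepest node. The tree, the artifact ids and the class name are made up for the illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy model of two dependency mediation strategies; it uses neither Maven nor ShrinkWrap. */
final class MediationSketch {
    static final class Dep {
        final String id;
        final String version;
        final List<Dep> children;

        Dep(String id, String version, Dep... children) {
            this.id = id;
            this.version = version;
            this.children = List.of(children);
        }
    }

    /** "Nearest definition": breadth-first walk, so the shallowest declaration of an artifact wins. */
    static Map<String, String> nearestWins(Dep root) {
        Map<String, String> resolved = new LinkedHashMap<>();
        Deque<Dep> queue = new ArrayDeque<>(root.children);
        while (!queue.isEmpty()) {
            Dep dep = queue.poll();
            // Only descend into a dependency whose version was actually selected.
            if (resolved.putIfAbsent(dep.id, dep.version) == null) {
                queue.addAll(dep.children);
            }
        }
        return resolved;
    }

    /** Depth-first walk: the first version met while descending each branch wins. */
    static Map<String, String> firstFoundDepthFirst(Dep root) {
        Map<String, String> resolved = new LinkedHashMap<>();
        for (Dep child : root.children) {
            visit(child, resolved);
        }
        return resolved;
    }

    private static void visit(Dep dep, Map<String, String> resolved) {
        resolved.putIfAbsent(dep.id, dep.version);
        for (Dep child : dep.children) {
            visit(child, resolved);
        }
    }

    /** project -> a:1.0 -> lib:1.0, and project -> lib:2.0 (made-up artifacts). */
    static Dep sampleTree() {
        return new Dep("project", "1.0",
            new Dep("a", "1.0", new Dep("lib", "1.0")),
            new Dep("lib", "2.0"));
    }

    public static void main(String[] args) {
        System.out.println("nearest wins: " + nearestWins(sampleTree()));
        System.out.println("depth first:  " + firstFoundDepthFirst(sampleTree()));
    }
}
```

On this tree the nearest-definition walk selects lib 2.0 (declared directly by the project) while the depth-first walk selects lib 1.0 (met first, under a), which is the kind of mismatch that produced different JAR versions in the WAR.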

To this day, I still don't know if that's a known bug and since nobody was replying to my thread on the ShrinkWrap forum I created an issue to track it. So far no answer. I hope someone from the ShrinkWrap project will reply.

Conclusion: Time to use the Maven Resolver library... Spoiler: I've succeeded in doing the same thing with it (and I get the same result as with mvn on the command line) and I'll report that in a blog post soon.

Jun 25 2018

Environment Testing Experimentations

I've been trying to define the best solution for doing functional testing in multiple environments (different DBs, Servlet containers, browsers) for XWiki. Right now on XWiki we test automatically on a single environment (Firefox, Jetty, HSQLDB) and we do the other environment tests manually.

So I've been going through different experimentations, finding out issues and limitations with them and progressing towards the perfect solution for us. Here are the various experiments we conducted. Note that this work is being done as part of the STAMP Research Project.

Here are the use cases that we want to support ideally:

- UC1: Fast to start XWiki in a given environment/configuration

- UC2: Solution must be usable both for running the functional tests and for distributing XWiki

- UC3: Be able to execute tests both on CI and locally on developer's machines

- UC4: Be able to debug functional tests easily locally

- UC5: Support the following configuration options (i.e we can test with variations of those and different versions): OS, Servlet container, Database, Clustering, Office Server, external SOLR, Browser

- UC6: Choose a solution that's as fast as possible for functional test executions

Experiments:

- Experimentation 1: CAMP from STAMP

- Experimentation 2: Docker on CI

- Experimentation 3: Maven build with Fabric8

- Experimentation 4: In Java Tests using Selenium Jupiter

- Experimentation 5: in Java Tests using TestContainers

- Conclusion

Experimentation 1: CAMP from STAMP

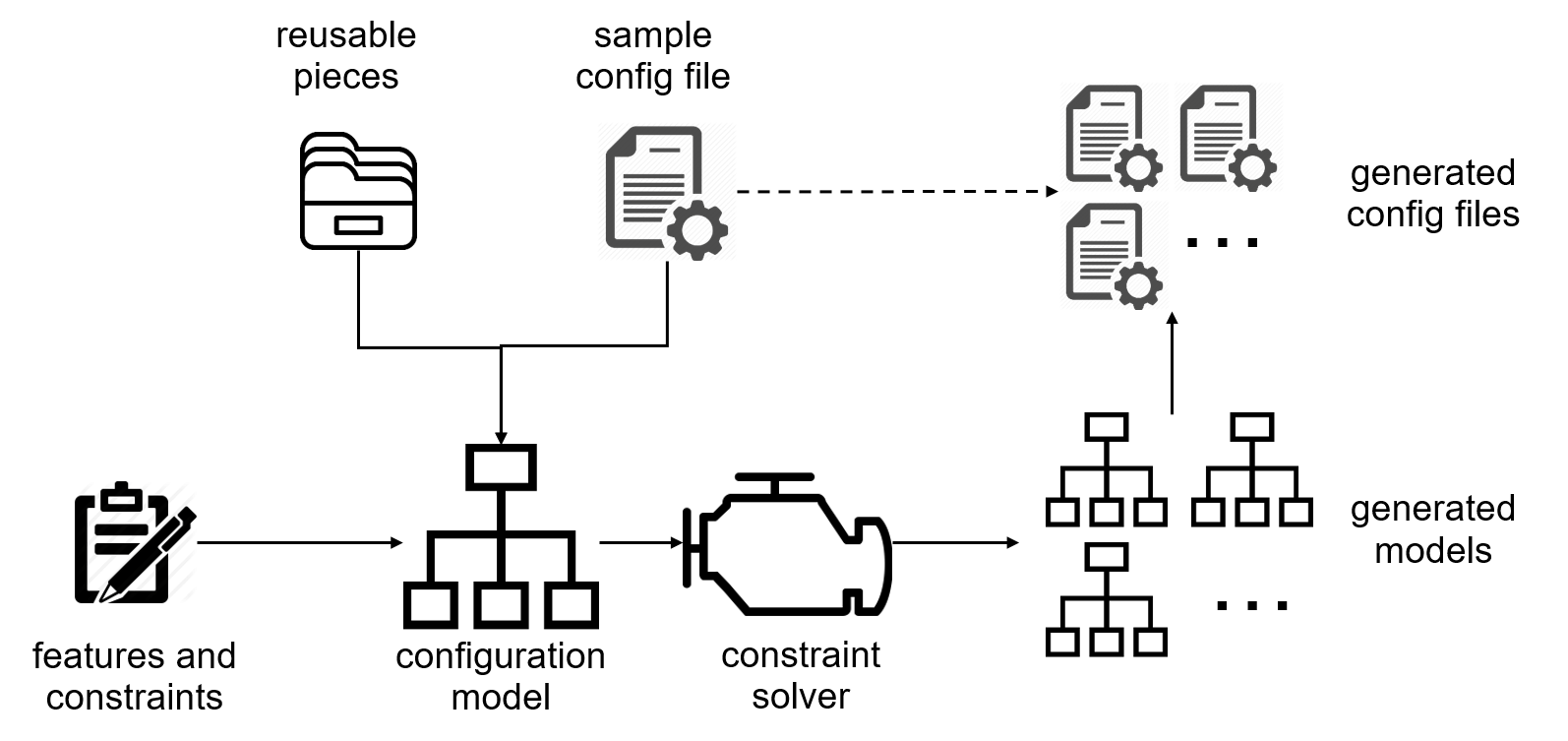

CAMP is a tool developed by some partners on the STAMP research project and it acts as a remote testing service. You give it some Dockerfile and it'll create the image, start a container, execute some commands (that you also provide to it and that are used to validate that the instance is working fine) and then stop the container. In addition it performs configuration mutation on the provided Dockerfile. This means it'll make some variations to this file, regenerate the image and re-run the container.

Here's how CAMP works (more details including how to use it on XWiki can be found on the CAMP home page):

Image from the CAMP web site

Limitations for the XWiki use case needs:

- Relies on a service. This service can be installed on a server on premises too but that means more infrastructure to maintain for the CI subsystem. Would be better if integrated directly in Jenkins for example.

- Cannot easily run on the developer machine which is important so that devs can test what they develop on various environments and so that they can debug reported issues on various environments. This fails at least UC3 and UC4.

- Even though mutation of configuration is an interesting concept, it's not a use case for XWiki which has several well-defined configurations that are supported. It's true that it could be interesting to have fixed topologies and only vary versions of servers (DB version, Servlet Container version and Java version - We don't need to vary Browser versions since we support only the latest version) but we think the added value vs the infrastructure cost might not be that interesting for us. However, it could still be interesting for example by randomizing the mutated configuration and only running tests on one such configuration per day to reduce the need of having too many agents and leaving them free for the other jobs.

Experimentation 2: Docker on CI

I blogged in the past about this strategy.

The main idea for this experiment was to use a Jenkins Pipeline with the Jenkins Plugin for Docker, which lets you write pipelines like this:

pipeline {

agent {

docker {

image 'xwiki-maven-firefox'

args '-v $HOME/.m2:/root/.m2'

}

}

stages {

stage('Test') {

steps {

docker.image('mysql:5').withRun('-e "MYSQL_ROOT_PASSWORD=my-secret-pw"') { c ->

docker.image('tomcat:8').withRun('-v $XWIKIDIR:/usr/local/tomcat/webapps/xwiki').inside("--link ${c.id}:db") {

[...]

wrap([$class: 'Xvnc']) {

withMaven(maven: mavenTool, mavenOpts: mavenOpts) {

[...]

sh "mvn ..."

}

}

}

}

}

}

}

}

Limitations:

- Similar to experimentation 1 with CAMP, this relies on the CI to execute the tests and doesn't allow developers to test and reproduce issues on their local machines. This fails at least UC3 and UC4.

Experimentation 3: Maven build with Fabric8

The next idea was to implement the logic in the Maven build so that it could be executed on developer machines. I found the very nice Fabric8 Maven plugin and came up with the following architecture that I tried to implement:

The main ideas:

- Generate the various Docker images we need (the Servlet Container one and the one containing Maven and the Browsers) using Fabric8 in a Maven module. For example to generate the Docker image containing Tomcat and XWiki:

pom.xml file:

[...]

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>docker-maven-plugin</artifactId>

<configuration>

<imagePullPolicy>IfNotPresent</imagePullPolicy>

<images>

<image>

<alias>xwiki</alias>

<name>xwiki:latest</name>

<build>

<tags>

<tag>${project.version}-mysql-tomcat</tag>

<tag>${project.version}-mysql</tag>

<tag>${project.version}</tag>

</tags>

<assembly>

<name>xwiki</name>

<targetDir>/maven</targetDir>

<mode>dir</mode>

<descriptor>assembly.xml</descriptor>

</assembly>

<dockerFileDir>.</dockerFileDir>

<filter>@</filter>

</build>

</image>

</images>

</configuration>

</plugin>

[...]

The assembly.xml file will generate the XWiki WAR:

<assembly xmlns="http://maven.apache.org/plugins/maven-assembly-plugin/assembly/1.1.2"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/plugins/maven-assembly-plugin/assembly/1.1.2 http://maven.apache.org/xsd/assembly-1.1.2.xsd">

<id>xwiki</id>

<dependencySets>

<dependencySet>

<includes>

<include>org.xwiki.platform:xwiki-platform-distribution-war</include>

</includes>

<outputDirectory>.</outputDirectory>

<outputFileNameMapping>xwiki.war</outputFileNameMapping>

<useProjectArtifact>false</useProjectArtifact>

</dependencySet>

</dependencySets>

<fileSets>

<fileSet>

<directory>${project.basedir}/src/main/docker</directory>

<outputDirectory>.</outputDirectory>

<includes>

<include>**/*.sh</include>

</includes>

<fileMode>755</fileMode>

</fileSet>

<fileSet>

<directory>${project.basedir}/src/main/docker</directory>

<outputDirectory>.</outputDirectory>

<excludes>

<exclude>**/*.sh</exclude>

</excludes>

</fileSet>

</fileSets>

</assembly>

And all the Dockerfile and ancillary files required to generate the image are in src/main/docker/*.

- Then in the test modules, start the Docker containers from the generated Docker images. Check the full POM:

[...]

<plugin>

<groupId>io.fabric8</groupId>

<artifactId>docker-maven-plugin</artifactId>

<executions>

<execution>

<id>start</id>

<phase>pre-integration-test</phase>

<goals>

<goal>start</goal>

</goals>

<configuration>

<imagePullPolicy>IfNotPresent</imagePullPolicy>

<showLogs>true</showLogs>

<images>

<image>

<alias>mysql-xwiki</alias>

<name>mysql:5.7</name>

<run>

[...]

<image>

<alias>xwiki</alias>

<name>xwiki:latest</name>

<run>

[...]

<image>

<name>xwiki-maven</name>

<run>

[...]

<volumes>

<bind>

<volume>${project.basedir}:/usr/src/mymaven</volume>

<volume>${user.home}/.m2:/root/.m2</volume>

</bind>

</volumes>

<workingDir>/usr/src/mymaven</workingDir>

<cmd>

<arg>mvn</arg>

<arg>verify</arg>

<arg>-Pdocker-maven</arg>

</cmd>

[...]

<execution>

<id>stop</id>

<phase>post-integration-test</phase>

<goals>

<goal>stop</goal>

</goals>

</execution>

</executions>

</plugin>

- Notice that the last container we start, i.e. xwiki-maven, is configured to map the current Maven source as a directory inside the Docker container, and that it starts Maven inside the container to run the functional tests using the docker-maven Maven profile.

Limitations:

- The environment setup is done from the build (Maven), which means that developers need to start it before executing the test from their IDE. This can cause friction in the developer workflow.

- We found issues when running Docker inside Docker and Maven inside Maven, specifically when having Maven start the Docker container containing the browsers, itself starting a Maven build which starts the browser and then the tests. This resulted in the Maven build slowing down and grinding to a halt. This was probably due to the fact that Docker uses up a lot of memory by default, and we would need to control very precisely the memory used by all processes (Maven, Surefire, Docker, etc). Java 10 would help but we're not using it yet and we're currently stuck on Java 8.

Experimentation 4: In Java Tests using Selenium Jupiter

The idea is to use Selenium Jupiter to automatically start/stop the various Browsers to be used by Selenium directly from the JUnit5 tests.

Note that XWiki has its own test framework on top of Selenium, with a class named TestUtils providing various APIs to help set up tests. We need to make this class available to the test too, for example by injecting it as a test method parameter. So I developed an XWikiDockerExtension JUnit5 extension that initializes the XWiki testing framework and performs this injection.

Here's what a very simple test looks like:

public class SeleniumTest

{

@BeforeAll

static void setup()

{

// TODO: move to the pom

SeleniumJupiter.config().setVnc(true);

SeleniumJupiter.config().setRecording(true);

SeleniumJupiter.config().useSurefireOutputFolder();

SeleniumJupiter.config().takeScreenshotAsPng();

SeleniumJupiter.config().setDefaultBrowser("firefox-in-docker");

}

@Test

public void test(WebDriver driver, TestUtils setup)

{

driver.get("http://xwiki.org");

assertThat(driver.getTitle(), containsString("XWiki - The Advanced Open Source Enterprise and Application Wiki"));

driver.findElement(By.linkText("XWiki's concept")).click();

}

}

Limitations:

- Works great for spawning Browser containers but doesn't support other types of containers such as DBs or Servlet Containers. Would need to implement the creation and start of them in a custom manner which is a lot of work.

Experimentation 5: in Java Tests using TestContainers

This idea builds on the Selenium Jupiter idea but using a different library, called TestContainers. It's the same idea but it's more generic since TestContainers allows creating all sorts of Docker containers (Selenium containers, DB containers, custom containers).

Here's how it works:

And here's an example of a Selenium test using it:

@UITest

public class MenuTest

{

@Test

public void verifyMenu(TestUtils setup)

{

verifyMenuInApplicationsPanel(setup);

verifyMenuCreationInLeftPanelWithCurrentWikiVisibility(setup);

}

private void verifyMenuInApplicationsPanel(TestUtils setup)

{

// Log in as superadmin

setup.loginAsSuperAdmin();

// Verify that the menu app is displayed in the Applications Panel

ApplicationsPanel applicationPanel = ApplicationsPanel.gotoPage();

ViewPage vp = applicationPanel.clickApplication("Menu");

// Verify we're on the right page!

assertEquals(MenuHomePage.getSpace(), vp.getMetaDataValue("space"));

assertEquals(MenuHomePage.getPage(), vp.getMetaDataValue("page"));

// Now log out to verify that the Menu entry is not displayed for guest users

setup.forceGuestUser();

// Navigate again to the Application Menu page to perform the verification

applicationPanel = ApplicationsPanel.gotoPage();

assertFalse(applicationPanel.containsApplication("Menu"));

// Log in as superadmin again for the rest of the tests

setup.loginAsSuperAdmin();

}

...

Some explanations:

- The @UITest annotation triggers the execution of the XWiki Docker Extension for JUnit5 (XWikiDockerExtension)

- In turn this extension will perform a variety of tasks:

- Verify if an XWiki instance is already running locally. If not, it will generate a minimal XWiki WAR containing just what's needed to test the module the test is defined in. Then in turn, it will start a database and start a servlet container and deploy the XWiki WAR in it by using a Docker volume mapping

- Initialize Selenium and start a Browser (the exact browser to start can be controlled in a variety of ways, with system properties and through parameters of the @UITest annotation).

- It will also start a VNC docker container to record all the test execution, which is nice when one needs to debug a failing test and see what happened.
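The decision flow described above can be summarized in a few lines of plain Java. This is only an illustrative sketch and not the code of XWikiDockerExtension: the class name, the method name and the step labels are hypothetical, and the real extension obviously starts containers instead of returning strings.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of the setup steps chosen by the extension. */
final class EnvironmentSetupPlan {
    private EnvironmentSetupPlan() {}

    /**
     * Returns the ordered setup steps. When an XWiki instance is already
     * running locally, the WAR generation and the DB/Servlet Container
     * startup are skipped and only the browser-related steps remain.
     */
    static List<String> stepsFor(boolean xwikiAlreadyRunning) {
        List<String> steps = new ArrayList<>();
        if (!xwikiAlreadyRunning) {
            steps.add("generate minimal XWiki WAR for the tested module");
            steps.add("start database container");
            steps.add("start servlet container with the WAR mapped as a Docker volume");
        }
        steps.add("start browser container and initialize Selenium");
        steps.add("start VNC container to record the test execution");
        return steps;
    }
}
```

Calling `EnvironmentSetupPlan.stepsFor(true)` yields only the two browser-related steps, which is what makes it fast to iterate on a test against an instance you started yourself.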

Current Status as of 2018-07-13:

- Browser containers are working and we can test in both Firefox and Chrome. Currently XWiki is started the old way, i.e. by using the XWiki Maven Package Plugin which generates a full XWiki distribution based on Jetty and HSQLDB.

- We have implemented the ability to fully generate an XWiki WAR directly from the tests (using the ShrinkWrap library), which was the prerequisite for being able to deploy XWiki in a Servlet Container running in a Docker container and to start/stop it.

- Work in progress:

- Support an existing running XWiki and in this case don't generate the WAR and don't start/stop the DB and Servlet Container Docker containers.

- Implement the start/stop of the DB Container (MySQL and PostgreSQL to start with) from within the test using TestContainers' existing MySQLContainer and PostgreSQLContainer classes.

- Implement the start/stop of the Servlet Container (Tomcat to start with) from within the test using TestContainers' GenericContainer feature.

Note that most of the implementation is generic and can be easily reused and ported to software other than XWiki.

Limitations:

- Only supports 2 browsers FTM: Firefox and Chrome. More will come. However it's going to be very hard to support browsers requiring Windows (IE11, Edge) or Mac OSX (Safari). Preliminary work is in progress in TestContainers but it's unlikely to result in any usable solution anytime soon.

- Note that this forced us to allow using Selenium 3.x while all our tests are currently on Selenium 2.x. Thus we implemented a solution to have the 2 versions run side by side and we modified our Page Objects to use reflection and call the right Selenium API depending on the version. Luckily there aren't too many places where the Selenium API has changed from 2.x to 3.x. Our goal is now to write new functional UI tests in Selenium 3 with the new TestContainers-based testing framework and progressively migrate tests using Selenium 2.x to this new framework.

- The full execution of the tests takes a bit longer than what we used to have with a single environment made of HSQLDB+Jetty. We'll measure the total time it takes once the full implementation is finished.
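To illustrate the reflection trick mentioned in the limitations above, here's a minimal, self-contained sketch. It is not the actual XWiki Page Object code and it doesn't use Selenium at all: OldApiElement and NewApiElement are hypothetical stand-ins for a class whose method was renamed between two versions of a library, and VersionBridge is a made-up helper name.

```java
import java.lang.reflect.Method;

/** Hypothetical stand-in for the old API version, which exposes getText(). */
class OldApiElement {
    public String getText() {
        return "old";
    }
}

/** Hypothetical stand-in for the new API version, where the method was renamed. */
class NewApiElement {
    public String readText() {
        return "new";
    }
}

final class VersionBridge {
    private VersionBridge() {}

    /**
     * Calls the first no-argument method from candidateNames that exists on
     * the target, so that the same caller works against either API version.
     */
    static Object callFirstAvailable(Object target, String... candidateNames) {
        for (String name : candidateNames) {
            try {
                Method method = target.getClass().getMethod(name);
                return method.invoke(target);
            } catch (NoSuchMethodException e) {
                // This version doesn't have that method name: try the next candidate.
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("Failed to call " + name, e);
            }
        }
        throw new IllegalStateException("No candidate method found on " + target.getClass());
    }
}
```

The caller lists the method names of both versions once, e.g. `VersionBridge.callFirstAvailable(element, "readText", "getText")`, and no longer needs to know which version is on the classpath. The price is the loss of compile-time checking, which is acceptable only because the number of API differences is small.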

Future ideas:

- Discuss with the CAMP developers to see how their mutation engine could be executed as a Java library so that it could be integrated in the XWiki testing framework. Namely two issues were opened on the CAMP issue tracker to discuss this:

Conclusion

At this point in time I'm happy with our last experiment and implementation based on TestContainers. It allows running environment tests directly from your IDE with no other prerequisite than having Docker installed on your machine. This means it also works from Maven or from any CI. We need to finish the implementation and this will give the XWiki project the ability to run tests on various combinations of configurations.

Once this is done we should be able to tackle the next step which involves more exotic configurations such as running XWiki in a cluster, configuring a LibreOffice server to test importing office documents into XWiki, and even configuring an external SOLR instance. However once the whole framework is in place, I don't expect this to cause any special problems.

Last, but not least, once we get this ability to execute various configurations, it'll be interesting to use a configuration mutation engine such as the one provided by CAMP in order to test various configurations in our CI. Since testing lots of them would be very costly in terms of number of Agents required and CPU power, one idea is to have a job that executes, say, once per day with a random configuration selected and that reports how the tests perform in it.
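As a rough idea of what "a random configuration selected once per day" could mean, here's a small sketch. It has nothing to do with CAMP's mutation engine: the class name is hypothetical and the three axes (database, servlet container, browser) are simply the ones mentioned in this post. Seeding the random generator makes a given run reproducible, which matters when a configuration fails and you want to replay it.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Random;

/** Hypothetical picker choosing one configuration per seed (e.g. one per day). */
final class RandomConfigurationPicker {
    static final List<String> DATABASES = Arrays.asList("hsqldb", "mysql", "postgresql");
    static final List<String> SERVLET_CONTAINERS = Arrays.asList("jetty", "tomcat");
    static final List<String> BROWSERS = Arrays.asList("firefox", "chrome");

    private RandomConfigurationPicker() {}

    /** Returns a configuration such as "mysql+tomcat+firefox"; the same seed always gives the same result. */
    static String pick(long seed) {
        Random random = new Random(seed);
        return pickOne(DATABASES, random) + "+"
            + pickOne(SERVLET_CONTAINERS, random) + "+"
            + pickOne(BROWSERS, random);
    }

    private static String pickOne(List<String> values, Random random) {
        return values.get(random.nextInt(values.size()));
    }
}
```

A daily job could call `RandomConfigurationPicker.pick(java.time.LocalDate.now().toEpochDay())` and log the seed next to the test results.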

May 09 2018

Automatic Test Generation with DSpot

DSpot is a mutation testing tool that automatically generates new tests from existing test suites. It's being developed as part of the STAMP European research project (in which XWiki SAS is participating, represented by me).

Very quickly, DSpot works as follows:

- Step 1: Find an existing test and remove some API calls. Also remove assertions (but keep the calls on the code being tested). Add logs in the source to capture object states

- Step 2: Execute the test and add assertions that validate the captured states

- Step 3: Run a selector to decide which tests to keep and which ones to discard. By default PITest/Descartes is used, meaning that only tests killing mutants that the original test didn't kill are kept. It's also possible to use other selectors. For example a Clover selector exists that will keep the tests which generate more coverage than the original test.

- Step 4: Repeat (with different API calls removed) or stop if good enough.
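The selection rule of Step 3 can be sketched in a few lines. This is not DSpot's code: the class and method names are hypothetical, and mutants are represented by plain string identifiers. The sketch keeps an amplified test only if it kills at least one mutant that the original test left alive.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

/** Hypothetical selector keeping only amplified tests that kill new mutants. */
final class MutantKillSelector {
    private MutantKillSelector() {}

    /**
     * @param killedByOriginal mutants killed by the original test
     * @param killedByAmplified mutants killed by each amplified test, keyed by test name
     * @return for each kept test, the mutants it kills that the original test missed
     */
    static Map<String, Set<String>> select(Set<String> killedByOriginal,
        Map<String, Set<String>> killedByAmplified)
    {
        Map<String, Set<String>> kept = new LinkedHashMap<>();
        for (Map.Entry<String, Set<String>> entry : killedByAmplified.entrySet()) {
            // Set difference: what this amplified test kills beyond the original test.
            Set<String> newlyKilled = new HashSet<>(entry.getValue());
            newlyKilled.removeAll(killedByOriginal);
            if (!newlyKilled.isEmpty()) {
                kept.put(entry.getKey(), newlyKilled);
            }
        }
        return kept;
    }
}
```

A coverage-based selector would have the same shape, with covered lines instead of killed mutants.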

For full details, see this presentation by Benjamin Danglot (main contributor of DSpot).

Today I tested the latest version of DSpot (I built it from its sources to have the latest code) and tried it on several modules of xwiki-commons.

FTR here's what I did to test it:

- Cloned DSpot and built it with Maven by running mvn clean package -DskipTests. This generated a dspot/target/dspot-1.1.1-SNAPSHOT-jar-with-dependencies.jar JAR.

- For each module on which I tested it, I created a dspot.properties file. For example for xwiki-commons-core/xwiki-commons-component/xwiki-commons-component-api, I created the following file:

project=../../../

targetModule=xwiki-commons-core/xwiki-commons-component/xwiki-commons-component-api

src=src/main/java/

srcResources=src/main/resources/

testSrc=src/test/java/

testResources=src/test/resources/

javaVersion=8

outputDirectory=output

filter=org.xwiki.*

Note that project is pointing to the root of the project.

- Then I executed: java -jar /some/path/dspot/dspot/target/dspot-1.1.1-SNAPSHOT-jar-with-dependencies.jar --path-to-properties dspot.properties

- Then checked the results in output/* to see if new tests had been generated

I had to test DSpot on 6 modules before getting any result, as follows:

- xwiki-commons-core/xwiki-commons-cache/xwiki-commons-cache-infinispan/: No new test generated by DSpot. One reason is that DSpot modifies the test sources, and the tests in this module extend abstract test classes located in other modules, which DSpot didn't touch and thus couldn't modify to generate new tests.

- xwiki-commons-core/xwiki-commons-component/xwiki-commons-component-api/: No new test generated by DSpot.

- xwiki-commons-core/xwiki-commons-component/xwiki-commons-component-default/: No new test generated by DSpot.

- xwiki-commons-core/xwiki-commons-component/xwiki-commons-component-observation/: No new test generated by DSpot.

- xwiki-commons-core/xwiki-commons-context/: No new test generated by DSpot.

- xwiki-commons-core/xwiki-commons-crypto/xwiki-commons-crypto-cipher/: Eureka! One test was generated by DSpot

Here's the original test:

public void testRSAEncryptionDecryptionProgressive() throws Exception

{

Cipher cipher = factory.getInstance(true, publicKey);

cipher.update(input, 0, 17);

cipher.update(input, 17, 1);

cipher.update(input, 18, input.length - 18);

byte[] encrypted = cipher.doFinal();

cipher = factory.getInstance(false, privateKey);

cipher.update(encrypted, 0, 65);

cipher.update(encrypted, 65, 1);

cipher.update(encrypted, 66, encrypted.length - 66);

assertThat(cipher.doFinal(), equalTo(input));

cipher = factory.getInstance(true, privateKey);

cipher.update(input, 0, 15);

cipher.update(input, 15, 1);

encrypted = cipher.doFinal(input, 16, input.length - 16);

cipher = factory.getInstance(false, publicKey);

cipher.update(encrypted);

assertThat(cipher.doFinal(), equalTo(input));

}

And here's the new test generated by DSpot, based on this test:

public void testRSAEncryptionDecryptionProgressive_failAssert2() throws Exception {

--> try {

Cipher cipher = factory.getInstance(true, publicKey);

cipher.update(input, 0, 17);

cipher.update(input, 17, 1);

cipher.update(input, 18, ((input.length) - 18));

byte[] encrypted = cipher.doFinal();

cipher = factory.getInstance(false, privateKey);

cipher.update(encrypted, 0, 65);

cipher.update(encrypted, 65, 1);

cipher.update(encrypted, 66, ((encrypted.length) - 66));

cipher.doFinal();

CoreMatchers.equalTo(input);

cipher = factory.getInstance(true, privateKey);

cipher.update(input, 0, 15);

cipher.update(input, 15, 1);

encrypted = cipher.doFinal(input, 16, ((input.length) - 16));

cipher = factory.getInstance(false, publicKey);

cipher.update(encrypted);

cipher.doFinal();

CoreMatchers.equalTo(input);

--> cipher.doFinal();

--> CoreMatchers.equalTo(input);

--> org.junit.Assert.fail("testRSAEncryptionDecryptionProgressive should have thrown GeneralSecurityException");

--> } catch (GeneralSecurityException eee) {

--> }

}

I've highlighted the parts that were added with the --> prefix. In short DSpot found that by calling cipher.doFinal() twice, it generates a GeneralSecurityException and that's killing some mutants that were not killed by the original test. Note that calling doFinal() resets the cipher, which explains why the second call generates an exception.

Looking at the source code, we can see:

public byte[] doFinal(byte[] input, int inputOffset, int inputLen) throws GeneralSecurityException

{

if (input != null) {

this.cipher.processBytes(input, inputOffset, inputLen);

}

try {

return this.cipher.doFinal();

} catch (InvalidCipherTextException e) {

throw new GeneralSecurityException("Cipher failed to process data.", e);

}

}

Haha... DSpot was able to automatically generate a new test that was able to create a state that makes the code go in the catch.

Note that it would have been even nicer if DSpot had put an assert on the exception message.
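Here's what such a stronger check could look like, as a self-contained sketch. OneShotCipher is a hypothetical stand-in and not the XWiki Cipher: it just mimics "the first doFinal() works, the next one throws", reusing the message from the catch block above.

```java
import java.security.GeneralSecurityException;

/** Hypothetical stand-in: the first doFinal() succeeds, later calls fail. */
final class OneShotCipher {
    private boolean finished;

    byte[] doFinal() throws GeneralSecurityException {
        if (finished) {
            throw new GeneralSecurityException("Cipher failed to process data.");
        }
        finished = true;
        return new byte[0];
    }
}

final class ExceptionMessageCheck {
    private ExceptionMessageCheck() {}

    /** Returns the message of the exception thrown by the second doFinal() call. */
    static String messageOfSecondDoFinal(OneShotCipher cipher) {
        try {
            cipher.doFinal();
        } catch (GeneralSecurityException e) {
            throw new AssertionError("First doFinal() should succeed", e);
        }
        try {
            cipher.doFinal();
        } catch (GeneralSecurityException expected) {
            // Returning the message lets the caller assert on it instead of
            // swallowing the exception in an empty catch block.
            return expected.getMessage();
        }
        throw new AssertionError("Second doFinal() should have thrown GeneralSecurityException");
    }
}
```

Compared to the generated test, the difference is that the catch block is no longer empty: a test can compare the returned message with "Cipher failed to process data." and would then also fail if the exception came from somewhere unexpected.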

Then I wanted to verify whether the test coverage had increased, so I ran JaCoCo before and after for this module:

- Before: 70.5%

- After: 71.2%

Awesome!

Conclusions:

- DSpot was able to improve the quality of our test suite automatically and as a side effect it also increased our test coverage (it's not always the case that new tests will increase the test coverage. DSpot's main intent, when executed with PIT/Descartes, is to increase the test quality - i.e. its ability to kill more mutants).

- It takes quite a long time to execute: overall, on those 6 modules, it took about 15 minutes to build them with DSpot/PIT/Descartes (when it takes about 1-2 minutes normally).

- DSpot doesn't generate a lot of tests: one test generated out of 100s of tests mutated (in this example session).

- IMO one good strategy to use DSpot is the following:

- Create a Jenkins pipeline job which executes DSpot on your code

- Since it's time consuming, run it only every month or so

- Have the pipeline automatically commit the generated tests to your SCM in a different test tree (e.g. src/test-dspot/)

- Modify your Maven build to use the Build Helper Maven plugin to add a new test source tree so that your tests run on both your manually-written tests and the ones generated by DSpot

- I find this an interesting strategy because it's automated and unattended. If you have to manually execute DSpot, look for generated tests and then manually incorporate them (with rewriting) into your existing test suite, then it's very tedious and time-consuming, and IMO the added value is too low compared to the time spent to be interesting.

WDYT?

EDIT: If you want to know more, check the presentation I gave at Devoxx France 2018 about New Generation of Tests.

Mar 20 2018

QDashboard & SonarQube

Here's a story from the past... (it happened 10 years ago).

Arnaud Heritier just dug up some old page on the Maven wiki that I had created back in 2005/2006.

I had written the Maven1 Dashboard plugin and when Maven2 came out I thought about rewriting it with a new more performant architecture and with more features.

At the time, I wanted to start working on this full time and I proposed the idea to several companies to see if they would sponsor its development (Atlassian, Cenqua, Octo Technology). They were all interested but for various reasons, I ended up joining the XWiki SAS company to work on the XWiki open source project.

So once I knew I wouldn't be working on this, I shared my idea publicly on the Maven wiki to see if anyone else would be interested to implement it.

Back then, I was happily surprised to see that Freddy Mallet actually implemented the idea:

In September 2006, I've discovered this page written by Vincent which has directly inspired the launch of an Open Source project. One year later we are pleased to announce that Sonar 1.0 release is now available. The missions of Sonar are to :

* Centralize and share quality information for all projects under continuous quality control

* Show you which ones are in pain

* Tell you what are the diseases

To do that, Sonar aggregates metrics from Checkstyle, PMD, Surefire, Cobertura / Clover and JavaNCSS. You can take a look to the screenshots gallery to get a quick insight.

Have fun.

Freddy

To give you the full picture, I'm now publishing something I never made public which are the slides that I wrote when I wanted to develop the idea:

QDashboard and SonarQube have several differences. An important one is that in the idea of QDashboard, there were supposed to be several input sources such as mailing lists, issue tracker, etc. At the moment SonarQube derives metrics mostly from the SCM. But I'm sure that the SonarQube guys have a lot of ideas in store for the future.

In 2013 I got a very nice present from SonarSource: a T-shirt recognizing me as #0 "employee" in the company, as the "Inceptor". That meant a lot to me.

Several years after, SonarQube has come a long way and I'm in awe of the great successful product it has become. Congrats guys!

Now, on to the following 10 years!

Onboarding Brainstorming

I had the honor of being invited to a seminar on "Automatic Quality Assurance and Release" at Dagstuhl by Benoit Baudry (we collaborate on the STAMP research project). Our seminar was organized as an unconference and one session I proposed and led was the "Onboarding" one described below. The following persons participated in the discussion: V. Massol, D. Gagliardi, B. Danglot, H. Wright, B. Baudry.

Onboarding Discussions

When you're developing a project (be it some internal project or some open source project) one key element is how easy it is to onboard new users to your project. For open source projects this is essential to attract more contributors and have a lively community. For internal projects, it's useful to be able to have new employees or newcomers in general be able to get up to speed rapidly on your project.

This brainstorming session was about ideas of tools and practices to use to ease onboarding.

Here's the list of ideas we had (in no specific order):

- 1 - Tag issues in your issue tracker as onboarding issues to make it easy for newcomers to get started on something easy and succeed quickly. This also validates that they're able to use your software.

- 2 - Have a complete package of your software that can be installed and used as easily as possible. It should just work out of the box without having to perform any configuration or additional steps. A good strategy for applications is to provide a Docker image (or a Virtual Machine) with everything setup.

- 3 - Similarly, provide a packaged development environment. For example you can provide a VM with some preinstalled and configured IDE (with plugins installed and configured using the project's rules). One downside of such an approach is the time it takes to download the VM (which could be several GB in size).

- 4 - A similar and possibly better approach would be to use an online IDE (e.g. Eclipse Che) to provide a complete prebuilt dev environment that wouldn't even require any downloading. This provides the fastest dev experience you can get. The downside is that if you need to onboard a potentially large number of developers, you'll need significant infrastructure (disk space/CPU) on the server(s) hosting the online IDE, for hosting all the dev workspaces. This makes this option difficult to implement for open source projects for example. But it's viable and interesting in a company environment.

- 5 - Obviously having good documentation is a given. However too many projects still don't provide this or only provide good user documentation but not good developer documentation with project practices not being well documented or only a small portion being documented. Specific ideas:

- Document the code structure

- Document the practices for development

- Develop a tool that supports newcomers by letting them know when they follow / don't follow the rules

- Good documentation should make its assumptions explicit (e.g. when you read this piece of documentation, I assume that you know X and Y)

- Have a good system to contribute to the documentation of the project (e.g. a wiki)

- Different documentation for users and for developers

- 6 - Have homogeneous practices and tools inside a project. This is especially true in a company environment where you may have various projects, each using its own tools and practices, making it harder to move between projects.

- 7 - Use standard tools that are well known (e.g. Maven or Docker). That increases the likelihood that a newcomer would already know the tool and be able to develop for your project.

- 8 - It's good to have documentation about best practices but it's even better if the important "must" rules are enforced automatically by a checking tool (it can be part of the build for example, or part of your IDE setup). For example instead of saying "this @Unstable annotation should be removed after one development cycle", you could write a Maven Enforcer rule (or a Checkstyle rule, or a Spoon rule) to break the build if it happens, with a message explaining the reason and what is to be done. People usually prefer having a tool tell them this rather than a person telling them that they haven't been following the best practices documented at such and such location...

- 9 - Have a bot to help you discover documentation pages about a topic. For example, have a chat bot located in the project's chat that, when asked about a topic, gives you the link to the relevant page.

- 10 - Projects must have a medium to ask questions and get fast answers (such as a chat tool). Forums or mailing lists are good but less suited to onboarding, since the newcomer has a lot of questions in the initial phase and needs a conversation.

- 11 - Have an answer strategy so that when someone asks a question, the doc is updated (new FAQ entry for example) so that the next person who comes can find the answer or be given the link to the doc.

- 12 - Mentoring (human aspect of onboarding): have a dedicated colleague to whom you're not afraid to ask questions and who is a referent to you.

- 13 - Supporting a variety of platforms for your software will make it simpler for newcomers to contribute to your project.

- 14 - Split your projects into smaller parts. While it's hard and a daunting experience to contribute to the core code of a project, if this project has a core as small as possible and the rest is made of plugins/extensions then it becomes simpler to start contributing to those extensions first.

- 15 - Have some interactive tutorial to learn about your software or about its development. A good example of a nice tutorial can be found at www.katacoda.com (for example for Docker, https://www.katacoda.com/courses/docker).

- 16 - Human aspect: have an environment that makes you feel welcome. Work and discuss how to best answer Pull Requests, how to communicate when someone joins the project, etc. Think of the newcomer as you would a child: somebody who will occasionally stumble and need encouragement. Try to have as much empathy as possible.

- 17 - Make sure that people asking questions always get an answer quickly, perhaps by establishing a role on the team to ensure answers are provided.

- 18 - Last but not least, an interesting thought experiment to verify that you have some good onboarding processes: imagine that 1000 developers join your project / company on the same day. How do you handle this?

Onboarding on XWiki

I was also curious to see how those ideas apply to the XWiki open source project and which ones we implement.

| Ideas | Implemented on XWiki? |
|---|---|
| 1 - Tag simple issues | |
| 2 - Complete install package | |
| 3 - Dev packaged environment | |
| 4 - Online IDE onboarding | |
| 5 - Good documentation | |
| 6 - Have homogeneous practices and tools inside a project | |
| 7 - Use standard tools that are well known | |
| 8 - Automatically enforced important rules | |
| 9 - Have a bot to help you discover documentation pages about a topic | |
| 10 - Projects must have a medium to ask questions and get fast answers | |
| 11 - Have an answer strategy so that when someone asks a question | |
| 12 - Mentoring (human aspect of onboarding) | |
| 13 - Supporting a variety of platforms for your software | |
| 14 - Split your projects into smaller parts | |
| 15 - Have some interactive tutorial to learn about your software | |
| 16 - Human aspect: have an environment that makes you feel welcome. | |
| 17 - Make sure that people asking questions always get an answer quickly | |
| 18 - 1000 devs joining at once experiment | |

So globally I'd say XWiki is pretty good at onboarding. I'd love to hear about things that we could improve on for onboarding. Any ideas?

If you own a project, we would be interested to hear about your ideas and how you perform onboarding. You could also use the list above as a way to measure your level of onboarding for your project and find out how you could improve it further.

Nov 17 2017

Controlling Test Quality

We already know how to control code quality by writing automated tests. We also know how to ensure that the code quality doesn't go down by using a tool to measure code covered by tests and fail the build automatically when it goes under a given threshold (and it seems to be working).

Wouldn't it be nice to also be able to verify the quality of the tests themselves?

I'm proposing the following strategy for this:

- Integrate PIT/Descartes in your Maven build

- PIT/Descartes generates a Mutation Score metric. So the idea is to monitor this metric and ensure that it keeps going in the right direction and doesn't go down. This is similar to watching the Clover TPC metric and ensuring it always goes up.

- Thus the idea would be, for each Maven module, to set up a Mutation Score threshold (you'd run it once to get the current value and set that value as the initial threshold) and have the PIT/Descartes Maven plugin fail the build if the computed mutation score is below this threshold. In effect this would tell you that the last changes have introduced tests that are of lower quality than existing tests (on average) and that the new tests need to be improved to the level of the others.
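To make the threshold idea concrete, here's a tiny sketch of the check itself. It is not the PIT/Descartes Maven plugin's code or configuration: the class name is hypothetical, and it assumes the usual definition of the mutation score as the percentage of generated mutants that are killed by the tests.

```java
/** Hypothetical build gate failing when the mutation score drops below a threshold. */
final class MutationScoreGate {
    private MutationScoreGate() {}

    /** Mutation score as a percentage: killed mutants over all generated mutants. */
    static double score(int killedMutants, int totalMutants) {
        if (totalMutants <= 0 || killedMutants < 0 || killedMutants > totalMutants) {
            throw new IllegalArgumentException("Invalid mutant counts");
        }
        return 100.0 * killedMutants / totalMutants;
    }

    /** Throws (i.e. "fails the build") if the score is below the module's threshold. */
    static void check(int killedMutants, int totalMutants, double thresholdPercent) {
        double score = score(killedMutants, totalMutants);
        if (score < thresholdPercent) {
            throw new IllegalStateException(String.format(
                "Mutation score %.1f%% is below the threshold of %.1f%%", score, thresholdPercent));
        }
    }
}
```

With a module at 45 killed mutants out of 60, the initial threshold would be set to 75. A later change bringing it to 44 out of 60 would make `check(44, 60, 75.0)` throw, which is the signal that the newly added tests are weaker than the existing ones.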

In order for this strategy to be implementable we need PIT/Descartes to implement the following enhancement requests first:

- Threshold check to prevent build in Maven

- Handle multimodule projects

- Improve efficiency. Even though this one is very important so that developers can run it as part of the build locally before pushing their commits, the PIT/Descartes Maven plugin could be executed on the CI. But even for that to be possible, I believe that the execution speed needs to be improved substantially.

I'm eagerly waiting for these issues to be fixed in order to try this strategy on the XWiki project and verify it can work in practice. There are some reasons why it might not work, such as being too painful to use or making it too hard to identify test problems and fix them.

WDYT? Do you see this as possibly working?